The next rung on the GPU ladder

If you’re running AI jobs, you already know how much your hardware choice shapes what’s possible and what it costs. That’s why we’ve added the NVIDIA RTX 5090 to Compute. More speed, less waiting, and a fair price. Let’s get right to the numbers.

Why the 5090 joins the lineup

When we launched with 4090s, we solved a big pain point: data-center GPUs like the A100 were either impossible to get or wildly overpriced. The 4090 turned out to be the sweet spot for most LLM inference and AI workloads.

But our users pushed us further. Teams wanted faster inference, better scaling, and an option to go “all in” without the energy burn. When the first batch of 5090s landed, we put them through their paces and opened up a whole new region (UAE-2) so you could get access right away.

Benchmark highlights at a glance

We’ve run side-by-side tests using real LLM workloads. Here’s what stands out:

- The 5090 cuts end-to-end latency by up to 9.6× compared to the 4090 and is more than twice as fast as the A100.

- At high loads, the 5090 delivers nearly 7 times the throughput of the 4090 and more than 2.5 times the throughput of the A100.

- Each 5090 is more energy-efficient, delivering over three times the performance per watt of the 4090.

If you’re running small to mid-sized LLMs, the 5090 is now the fastest, most cost-effective option in Compute.
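If you want to sanity-check ratios like these on your own runs, here's a minimal sketch of the arithmetic behind a latency speedup and a performance-per-watt comparison. The function names are ours for illustration, and the numbers in the example run are hypothetical placeholders, not our benchmark results.

```python
def speedup(baseline_latency_s: float, new_latency_s: float) -> float:
    """How many times faster the new card is, based on end-to-end latency."""
    if baseline_latency_s <= 0 or new_latency_s <= 0:
        raise ValueError("latencies must be positive")
    return baseline_latency_s / new_latency_s


def perf_per_watt(throughput_tokens_per_s: float, avg_power_w: float) -> float:
    """Tokens per second delivered for each watt of average power draw."""
    if avg_power_w <= 0:
        raise ValueError("power must be positive")
    return throughput_tokens_per_s / avg_power_w


def efficiency_ratio(new_ppw: float, baseline_ppw: float) -> float:
    """How many times more work per watt the new card delivers."""
    return new_ppw / baseline_ppw


if __name__ == "__main__":
    # Hypothetical placeholder measurements; substitute your own readings.
    baseline = perf_per_watt(throughput_tokens_per_s=300.0, avg_power_w=600.0)
    candidate = perf_per_watt(throughput_tokens_per_s=1000.0, avg_power_w=500.0)
    print(f"latency speedup: {speedup(8.0, 2.0):.1f}x")
    print(f"perf-per-watt ratio: {efficiency_ratio(candidate, baseline):.1f}x")
```

Measure power as an average over the same window you measure throughput, otherwise the ratio mixes idle and loaded draw.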

How we ran the tests

We don’t hide behind benchmarks that nobody can reproduce. Here’s our setup:

- Model: meta-llama/Llama-3.1-8B-Instruct

- Sequence lengths: 8,192-token context; 512 output tokens

- Engine: vLLM 0.8.3 (benchmark_serving.py)

- Scenarios:

  - Moderate load (1 req/s, 100 prompts)

  - Extreme load (1,100 req/s, 1,500 prompts)

- Regions: France, UAE-2

You can check the detailed results in our benchmark PDF. If you want a closer look at the test configs or want to run your own comparisons, just ask. We’re happy to walk you through the details.
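If you'd like to approximate the setup above yourself, here's a rough sketch that builds the benchmark_serving.py command line for each scenario. Treat it as a starting point under assumptions, not our exact invocation: we assume the Hugging Face model ID meta-llama/Llama-3.1-8B-Instruct, the script's synthetic `random` dataset, and that the 8,192-token context maps to the random input length. The flag names are as we recall them from vLLM's benchmark script, so check them against `--help` for your vLLM version. The script also expects a vLLM server to already be running with the same model.

```python
import shlex

# Assumed Hugging Face ID for the model named in the setup list.
MODEL = "meta-llama/Llama-3.1-8B-Instruct"

# Assumption: "context 8,192" is used as the synthetic prompt length.
INPUT_TOKENS = 8192
OUTPUT_TOKENS = 512

# The two load scenarios from the setup list.
SCENARIOS = {
    "moderate": {"request_rate": 1, "num_prompts": 100},
    "extreme": {"request_rate": 1100, "num_prompts": 1500},
}


def build_benchmark_command(scenario: str) -> list[str]:
    """Return the argument list for one benchmark_serving.py run."""
    params = SCENARIOS[scenario]
    return [
        "python", "benchmark_serving.py",
        "--backend", "vllm",
        "--model", MODEL,
        "--dataset-name", "random",  # assumption: synthetic prompts
        "--random-input-len", str(INPUT_TOKENS),
        "--random-output-len", str(OUTPUT_TOKENS),
        "--request-rate", str(params["request_rate"]),
        "--num-prompts", str(params["num_prompts"]),
    ]


if __name__ == "__main__":
    # Print the commands instead of running them, so nothing is launched here.
    for name in SCENARIOS:
        print(f"{name}: {shlex.join(build_benchmark_command(name))}")
```

Printing the commands first makes it easy to review them before pointing the benchmark at a live endpoint.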

What it means for your workload

With 5090s, anyone running LLMs up to 13B parameters can get data-center performance, without a data-center bill or a six-month waitlist. The cards scale linearly, so you can cluster them and tackle heavy workloads, or spin up one for quick experiments.

- For most inference jobs, you’ll see lower latency and better price/performance than any previous Compute option.

- Per-second billing keeps costs honest with no padding and no surprises.
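To see what per-second billing means in practice, here's a small sketch that compares it with rounding a job up to whole hours. The hourly rate, the function names, and the four-decimal rounding are all hypothetical, chosen for illustration; they are not Compute's actual prices or rounding rules.

```python
from decimal import Decimal, ROUND_HALF_UP

SECONDS_PER_HOUR = 3600
FOUR_PLACES = Decimal("0.0001")  # illustrative rounding precision


def per_second_cost(hourly_rate: Decimal, runtime_seconds: int) -> Decimal:
    """Cost when every second is billed at hourly_rate / 3600."""
    cost = hourly_rate * runtime_seconds / Decimal(SECONDS_PER_HOUR)
    return cost.quantize(FOUR_PLACES, rounding=ROUND_HALF_UP)


def hourly_rounded_cost(hourly_rate: Decimal, runtime_seconds: int) -> Decimal:
    """Cost when the runtime is rounded up to whole hours, for comparison."""
    hours = -(-runtime_seconds // SECONDS_PER_HOUR)  # ceiling division
    return (hourly_rate * hours).quantize(FOUR_PLACES, rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    rate = Decimal("1.00")  # hypothetical hourly rate, not a real price
    runtime = 754           # a job that runs 12 minutes 34 seconds
    print("per-second:", per_second_cost(rate, runtime))
    print("hourly-rounded:", hourly_rounded_cost(rate, runtime))
```

The gap between the two figures is largest for short experiments, which is where billing by the second helps most.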

When 4090s or A100s still win

Not every job needs the biggest hammer. Here’s when the 4090 or A100 might be your better pick:

- If you’re training huge models and need more VRAM than a 5090 offers, A100 nodes still make sense.

- For jobs with massive sequence lengths or fine-tuning across cards, A100s shine.

- The 4090 is still an incredible value for smaller projects or tight budgets.

Still, we think that for most use cases, 4090s, and now 5090s, are a better choice than A100s. For more, check out our earlier post, “Why more developers are choosing RTX 4090 over A100.”

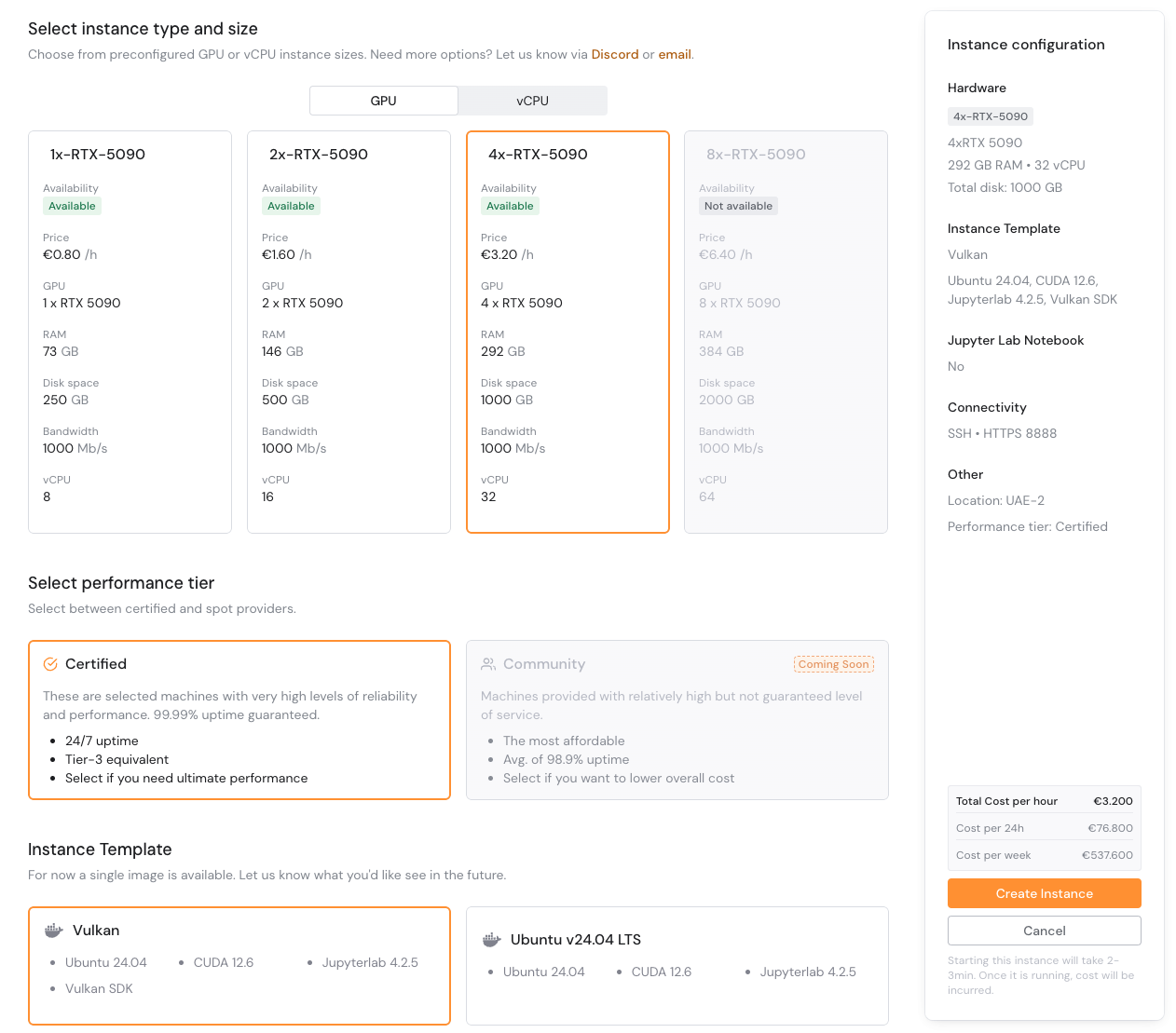

How to launch a 5090 in Compute

It’s as simple as ever:

- Log in to your Compute dashboard

- Choose the UAE-2 region

- Select GPU (5090) as your hardware

- Pick your template (or spin up your own)

- Click Launch

You’re up and running in under a minute.

Looking ahead

We’re already planning for more regions with 5090 capacity and are testing multi-GPU templates. If you’ve got feedback or want a feature, let us know. Compute is always evolving with you.