Consumer GPUs aren’t just for gaming anymore. Here’s what our tests show.

Consumer GPUs are catching up. Our latest benchmarks show that the RTX 5090 — and even the 4090 — can match or beat an A100 for small and medium LLM inference. Faster responses, higher throughput, and lower costs make them a serious option for anyone building or scaling AI workloads.

---

The A100 has long been the gold standard for high-performance inference. But in our latest benchmarks, the new RTX 5090 — and even the older 4090 — are proving that consumer-grade GPUs can hold their own. In some cases, they outperform the A100 while costing far less.

We ran inference tests on an 8B LLaMA 3.1 Instruct model using the vLLM benchmark suite and the ShareGPT dataset. The goal was simple: see how the 4090 and 5090 stack up against an A100 for small to medium LLM deployments, both in low-load (interactive) and high-load (throughput-heavy) scenarios.

The short version

- RTX 5090 beat the A100 on latency and slightly on throughput in this setup.

- Latency (1 rps): 5090 cut TTFT to ~45 ms vs ~296 ms on A100 (huge for interactive apps) and lowered end-to-end latency by ~14%.

- Throughput (heavy load): 5090 delivered ~3802 tokens/s vs ~3748 tokens/s on A100 (~1.4% higher).

- Two 5090s roughly doubled throughput to ~7604 tokens/s, about ~2× an A100 in this test.

- RTX 4090 trailed the A100 on both latency and throughput here. It’s strong for its class, but not an A100 replacement at these settings.

If you’re serving small to medium models (like an 8B) and you care about snappy first token and steady tokens/s, a single 5090 already meets or edges past an A100 in our runs. If you scale out with two 5090s, you can clear ~2× the tokens/s of a lone A100 while keeping hardware costs flexible.

That doesn’t make datacenter GPUs obsolete. VRAM still rules for larger models and longer contexts, and A100s shine where memory headroom and multi-instance partitioning matter. But for many production 8B workloads, well-configured consumer GPUs are a practical alternative with real-world gains, especially on TTFT where user perception lives.

Read on for more details on the benchmark.

Benchmark Objectives

- Evaluate latency and throughput across different GPU classes.

- Determine whether one or multiple consumer-grade GPUs can surpass or match the A100 for small and medium-sized models.

- Provide verifiable results for infrastructure decision-making (cost-effective deployment strategies).

Static Configuration

Test Scenarios

1. Moderate Load (Latency Test)

2. Extreme Load (Throughput Test)

Results & Analysis

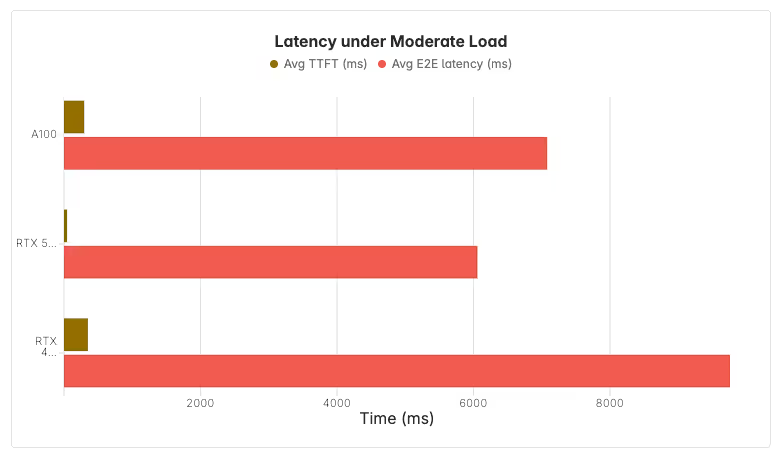

Scenario 1 – Latency under Moderate Load (1 req/s)

All GPUs handle moderate load scenarios effectively. However, the RTX 5090 significantly outperforms all other tested GPUs, including the high-end A100, in all latency categories:

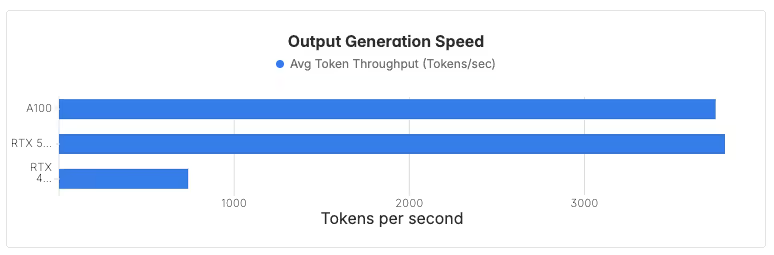

Scenario 2 – Throughput under Extreme Load (1100 req/s)

What this means for you

Across both low-load and high-load inference scenarios with medium-sized model (8B), high-end consumer-grade GPUs demonstrate comparable or superior performance to the A100 datacenter-grade GPU.

- Under moderate load (1 req/s), the RTX 4090 offers latencies close to the A100 performances, and the RTX 5090 delivers superior performances.

- Under extreme load (1100 req/s), the RTX 5090 achieves slightly higher throughput than the A100, while dual RTX 5090s are expected to deliver ~100% more token throughput, respectively.

While the A100 remains advantageous for certain workloads requiring larger VRAM, these results show that for medium-sized models, some consumer-grade GPUs are viable alternatives, especially when cost, and scalability are key considerations.

If you’re deploying small to medium LLMs, a well-configured 5090 — or a small cluster of them — can rival datacenter-grade hardware. You’ll trade some VRAM headroom, but gain serious cost savings and scalability options. For startups, research teams, or anyone who needs high performance without locking into expensive hardware, consumer GPUs are no longer a compromise.